This article is intended to be a friendly introduction to WebSemaphore and the ways it can help you manage concurrency in your API communication for profit and business continuity.

What is concurrency control

Concurrency control ensures that correct results for concurrent operations are generated, while getting those results as quickly as possible (Wikipedia).

Concurrency management, in particular management of concurrent access to a resource, shows up frequently when dealing with databases, file systems and external resources. The term “resource” is often used in this context to represent anything, be it a service, a device, a software license or a physical space such as a meeting room - as long as there is an API to control it. Some terms that point to concurrency without directly mentioning it are locks, mutexes, synchronization and others.

In the context of modern, highly distributed systems, WebSemaphore provides a SaaS version of the semaphore construct known from databases, operating systems and, more recently, cloud environments. To allow for elasticity and flexibility, it also combines the traits of a message broker and an integration solution.

Why manage concurrency?

Data consistency

Most frequently and naturally concurrency limits show up when updating data. Imagine two processes that would like to update a database property at the same time, incrementing its value by one. To do this both would read the current value, add one and write the result back. Regardless of whether the action is performed in the database (e.g. with an SQL statement) or outside by some wrapping code, both processes risk using old data and overwriting each other’s results unless special measures are taken.
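The lost update described above can be replayed step by step. The following is a minimal sketch, not tied to any particular database: the `Counter` class and both demo functions are hypothetical names introduced for illustration. The first function reproduces the problematic interleaving deterministically, and the second shows one possible "special measure", a lock that makes the read, add and write a single indivisible step.

```python
import threading


class Counter:
    """A shared value that is updated with a read-modify-write cycle."""

    def __init__(self) -> None:
        self.value = 0
        self._lock = threading.Lock()

    def safe_increment(self) -> None:
        # The lock turns read, add and write into one indivisible step.
        with self._lock:
            self.value = self.value + 1


def lost_update_demo() -> int:
    """Replay the bad interleaving of two unsynchronized processes."""
    counter = Counter()
    seen_by_a = counter.value      # process A reads 0
    seen_by_b = counter.value      # process B reads 0 as well
    counter.value = seen_by_a + 1  # A writes 1
    counter.value = seen_by_b + 1  # B writes 1 too, overwriting A's update
    return counter.value           # two increments happened, one was lost


def locked_demo(workers: int = 4, increments: int = 500) -> int:
    """Run many concurrent increments that are protected by the lock."""
    counter = Counter()

    def work() -> None:
        for _ in range(increments):
            counter.safe_increment()

    threads = [threading.Thread(target=work) for _ in range(workers)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter.value
```

With the lock in place, `locked_demo(4, 500)` always ends at 2000, while the unsynchronized replay ends at 1 even though two increments were attempted.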

Capacity/load management

Any given system can process only so many requests at a time. The number may be high but is always finite. Once maximum capacity is reached, the system may degrade or crash unless the load is slowed down or redirected to another instance. In such scenarios systems become unresponsive or return errors.

Failure protection

Elastically managing capacity in terms of concurrent processes provides clients with protection against failing APIs. If you think about it, a service that doesn’t respond is equivalent to a service with zero throughput. If we can buffer input when the throughput is arbitrarily low, we can also handle the case when there’s no throughput at all.

Explicit limitations

Sometimes a concurrency limit is simply an outcome of the use case. An easy example is a printer, which can print only one page at a time. The only options are to refuse new print jobs while one is in progress or to queue the incoming jobs.

Many present-day APIs have limitations that will not allow a client to exceed a certain number of concurrent requests, specified by the user’s license / SLA.

When WebSemaphore can help

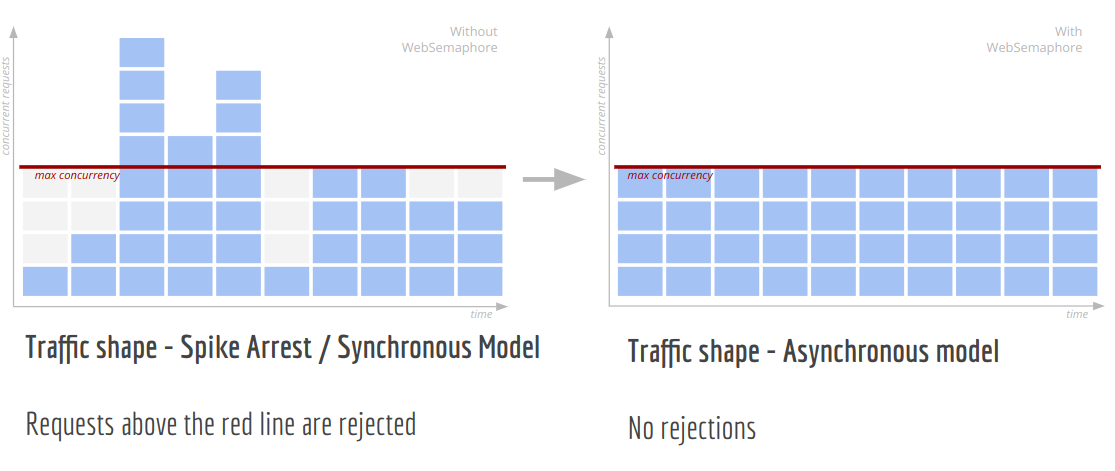

WebSemaphore wraps any API and provides amortization of traffic so that the total count of processes accessing the API doesn’t exceed a preconfigured value.
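To make the idea of a preconfigured concurrency limit concrete, here is a minimal in-process analogy built on Python's standard `asyncio.Semaphore`. It is not WebSemaphore's API: the function name `run_with_limit` and the simulated API call are assumptions made for illustration, and a hosted semaphore would enforce the same kind of limit across separate processes and machines rather than inside one program.

```python
import asyncio


async def run_with_limit(job_count: int, limit: int) -> int:
    """Run `job_count` simulated API calls, at most `limit` at a time.

    Returns the highest number of calls that were in flight together.
    """
    semaphore = asyncio.Semaphore(limit)
    in_flight = 0
    peak = 0

    async def call_api(job_id: int) -> None:
        nonlocal in_flight, peak
        async with semaphore:          # waits here while `limit` calls are active
            in_flight += 1
            peak = max(peak, in_flight)
            await asyncio.sleep(0.01)  # stand-in for the real API call
            in_flight -= 1

    await asyncio.gather(*(call_api(i) for i in range(job_count)))
    return peak


if __name__ == "__main__":
    print(asyncio.run(run_with_limit(job_count=20, limit=3)))
```

All twenty calls complete, but the wrapped API never sees more than three of them at once; the rest simply wait their turn.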

During request traffic spikes

Instead of getting rejected, requests get queued and delivered once current processing is complete.

During outages

If your system goes down, WebSemaphore keeps accepting requests. As soon as you’ve fixed the issue, it will resume feeding the requests in the order they arrived.
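The buffering behaviour during an outage can be sketched with a plain FIFO queue. This is an illustrative model only: `BufferedGateway`, `submit` and `set_available` are hypothetical names, not part of any WebSemaphore interface, and a real service would persist the backlog rather than keep it in memory.

```python
from collections import deque
from typing import Callable, Deque


class BufferedGateway:
    """Accepts every request and delivers in arrival order when possible."""

    def __init__(self, deliver: Callable[[str], None]) -> None:
        self._deliver = deliver
        self._backlog: Deque[str] = deque()
        self._available = True

    def submit(self, request: str) -> None:
        # Always accept. New requests go behind any backlog,
        # so arrival order is preserved.
        self._backlog.append(request)
        self._drain()

    def set_available(self, available: bool) -> None:
        # Called when the downstream system goes down or recovers.
        self._available = available
        self._drain()

    def _drain(self) -> None:
        while self._available and self._backlog:
            self._deliver(self._backlog.popleft())


if __name__ == "__main__":
    delivered = []
    gateway = BufferedGateway(delivered.append)
    gateway.submit("a")
    gateway.set_available(False)  # outage starts
    gateway.submit("b")
    gateway.submit("c")
    gateway.set_available(True)   # outage ends, backlog is replayed
    print(delivered)
```

Requests submitted during the outage are held rather than rejected, and they reach the downstream system in the same order they arrived once it is available again.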

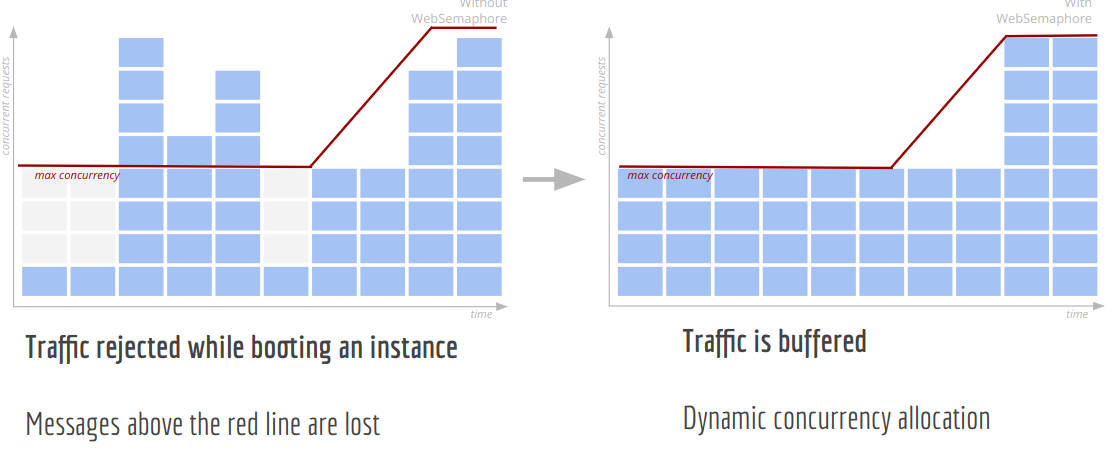

Smooth and efficient scaling of systems

Modern clouds allow easy scaling of the number of instances when demand goes up. Depending on your application and the time it takes to spin up an instance (which may sometimes require human approval), the traffic that comes in the meantime may be rejected. WebSemaphore will keep accepting requests until the new instances are ready. You can then dynamically increase the concurrency limit and process the backlog.

With WebSemaphore, you can take your time scaling up without losing incoming requests. Conversely, you can scale down before the spike is completely over, and WebSemaphore will let you catch up with the backlog during the lower-traffic period.
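The effect of changing the concurrency limit while a backlog exists can be modelled in a few lines. The sketch below is a simplified, single-process model with hypothetical names (`ResizableLimiter`, `set_limit`); it only illustrates the bookkeeping and makes no claim about how WebSemaphore implements it.

```python
from collections import deque
from typing import Deque, Set


class ResizableLimiter:
    """Admits queued jobs up to a limit that can change at runtime."""

    def __init__(self, limit: int) -> None:
        self.limit = limit
        self.active: Set[str] = set()
        self.waiting: Deque[str] = deque()

    def submit(self, job: str) -> None:
        self.waiting.append(job)
        self._dispatch()

    def complete(self, job: str) -> None:
        self.active.discard(job)
        self._dispatch()

    def set_limit(self, limit: int) -> None:
        # Raising the limit admits waiting jobs immediately. Lowering it
        # never interrupts running jobs; it only delays new admissions.
        self.limit = limit
        self._dispatch()

    def _dispatch(self) -> None:
        while self.waiting and len(self.active) < self.limit:
            self.active.add(self.waiting.popleft())


if __name__ == "__main__":
    limiter = ResizableLimiter(limit=2)
    for job in ["a", "b", "c", "d", "e"]:
        limiter.submit(job)
    limiter.set_limit(4)  # new instances are ready: admit more of the backlog
    print(sorted(limiter.active), list(limiter.waiting))
```

In this model, scaling up drains the backlog faster, while scaling down lets the jobs already running finish and holds the remainder until the active count falls below the new limit.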

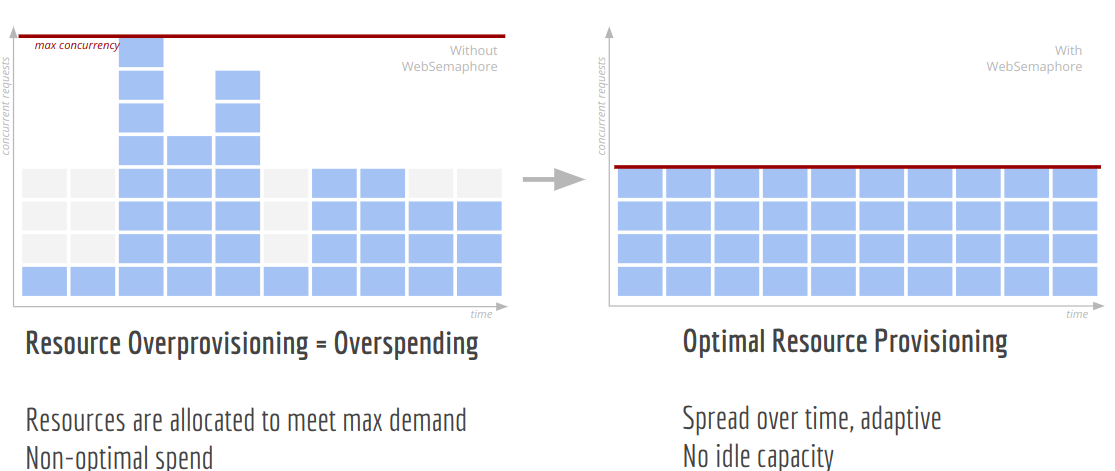

Optimize capacity/throughput

While scaling up is easy with modern providers, it’s also expensive. Planning for maximum capacity is the most performant option but also the most expensive, while planning for minimal capacity will not hold up under traffic spikes. WebSemaphore allows planning for average traffic while letting the resource be used at optimal capacity at all times.
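A rough way to reason about this trade-off is to simulate the backlog that builds up when capacity is sized near the average rather than the peak. The function below is a deliberately simple sketch: the name `simulate_backlog`, the fixed time steps and the sample traffic numbers are all illustrative assumptions, not measurements.

```python
from typing import List, Tuple


def simulate_backlog(arrivals: List[int], capacity: int) -> Tuple[int, int]:
    """Return (peak backlog, final backlog) for a fixed per-tick capacity.

    `arrivals[i]` is the number of requests arriving in tick i. Requests
    that cannot be processed in a tick are queued for later ticks.
    """
    backlog = 0
    peak = 0
    for arriving in arrivals:
        backlog = max(0, backlog + arriving - capacity)
        peak = max(peak, backlog)
    return peak, backlog


if __name__ == "__main__":
    # Hypothetical traffic: a short spike of 10 requests per tick,
    # otherwise 2 per tick (average 3.6 per tick).
    traffic = [2, 2, 10, 10, 2, 2, 2, 2, 2, 2]
    print(simulate_backlog(traffic, capacity=4))   # sized near the average
    print(simulate_backlog(traffic, capacity=10))  # sized for the peak
```

With this sample traffic, a capacity of 4 per tick builds a temporary backlog that is fully drained by the end of the run, whereas a capacity of 10 never queues anything but sits mostly idle outside the spike.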

When the use-case requires

Sometimes a whole flow needs to be performed in such a way that no more than one, or N, such flows run at the same time. This is true even with ephemeral resources such as AWS Lambda functions, which are limited by default to 1,000 concurrent executions per region in a single account.

Why WebSemaphore

As a cloud-native SaaS product, WebSemaphore requires zero setup or infrastructure maintenance. We also made the API simple and understandable. Since it works over HTTPS/WebSockets, it can immediately work with almost any stack you are currently using.

To see whether WebSemaphore is something that could work for you, please head to https://www.websemaphore.com. If you’d like to chat, use this link to schedule a call.

WebSemaphore is in beta and is actively looking for pilot customers to help shape the product decisions. Get in touch if you are interested.